The rapidly advancing field of artificial intelligence presents a myriad of ethical challenges that demand our attention. As AI systems become increasingly integrated into various aspects of our lives, from healthcare to finance, and education to security, it is crucial to examine the ethical implications surrounding these technologies. By addressing these concerns, we enable responsible AI development and deployment that respects human values and cultivates a secure, fair, and transparent future.

Understanding AI and Ethics

What is Artificial Intelligence (AI)?

Artificial intelligence (AI) refers to the development of computer systems that can perform tasks that typically require human intelligence, such as problem-solving, reasoning, perception, and understanding natural language. It has become a significant part of daily life, influencing sectors like finance, healthcare, transportation, and entertainment.

Ethics in AI

Ethics in AI refers to the study and application of moral principles in the development, deployment, and governance of AI systems. It aims to ensure that AI technologies are designed and implemented in a manner that respects human values, minimizes harm, and maximizes benefits. Key ethical concerns surrounding AI include privacy, fairness, transparency, security, and accountability. Striking a balance between potential benefits and potential risks is a critical ethical concern.

Privacy

Ensuring that AI systems do not inadvertently magnify societal biases or contribute to privacy violations is a crucial ethical consideration for developers and policymakers.

Fairness & Transparency

AI decision-making processes must be understandable and justifiable to humans, particularly in high-stakes situations like medical diagnosis or criminal justice. Ensuring explainability requires collaboration to create systems that can provide meaningful insights into their decision-making processes.

Accountability in Artificial Intelligence Ethics

As AI systems become increasingly integrated into various sectors, establishing guidelines for assigning responsibility and liability in cases of AI-system-induced damages is a complex issue that requires input from developers, policymakers, and regulatory bodies.

AI Bias and Discrimination

Recent years have seen remarkable strides in artificial intelligence (AI), revolutionizing industries such as finance, healthcare, and security by providing valuable insights for decision-making. While AI has the potential to vastly improve lives, it is important to recognize that bias and discrimination within these systems can undermine their benefits.

AI bias is systematic error in a model’s predictions caused by prejudices embedded in the training data or the algorithm. When AI systems exhibit bias, they can produce discriminatory results that unfairly target specific groups, perpetuating existing social inequities.

The origins of bias in AI systems can be traced back to the data used to train them and the developers who create these algorithms.

The manifestation of bias and discrimination in AI algorithms can have severe consequences, particularly for vulnerable or underrepresented groups.

Addressing these ethical concerns necessitates concerted efforts among AI developers, researchers, policymakers, and other stakeholders to improve transparency, fairness, and inclusivity in AI systems.

A holistic approach involving ethical guidelines, data auditing, and robust fairness metrics can help identify and correct biases in AI algorithms.
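
As a rough illustration of what a fairness metric can look like in practice, the sketch below computes the demographic parity difference: the gap between the highest and lowest rate of positive predictions across groups. The function names, the loan-approval framing, and the sample data are all hypothetical, and demographic parity is only one of several possible metrics; it is not presented here as the right choice for every system.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates (0 = parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical model outputs: 1 = approved, 0 = rejected.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = selection_rates(preds, groups)
gap = demographic_parity_difference(preds, groups)
print(rates)  # selection rate per group
print(gap)    # difference between the best- and worst-treated group
```

A large gap does not prove discrimination on its own, but it flags a disparity that a data audit should then investigate.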

Additionally, efforts to increase diversity among AI developers and engage ethicists in the design and development of AI systems can contribute to mitigating the risk of bias and discrimination.

Undoubtedly, the full potential of artificial intelligence can only be harnessed by committing to the implementation of ethical principles in AI development. Emphasizing fairness, transparency, and accountability is critical for the responsible implementation and enduring success of AI technologies.

To achieve equitable outcomes and build public trust in these transformative technologies, it is essential to ensure AI systems are free from bias and discrimination.

Privacy and Surveillance

As AI technology continues its rapid evolution, privacy and surveillance concerns have emerged as primary ethical issues for both developers and users. AI-powered tools possess the ability to collect, store, and analyze immense amounts of data, making the potential invasion of individual privacy and intensification of surveillance efforts a significant concern.

One vital aspect of ethical AI involves the development and application of these technologies in a manner that respects users’ privacy rights, maintains transparency, and minimizes potential harm.

One of the significant risks associated with AI-driven data collection is the unauthorized sharing or misuse of personal information.

This information can range from demographic details to sensitive biometric data such as facial recognition patterns. The implications of AI-enabled surveillance technologies are broad and far-reaching, having direct and indirect effects on individuals, communities, and society.

For instance, unregulated data collection might be used to support discriminatory practices, influence political outcomes, or enable identity theft. To mitigate these concerns, it is critical that developers build stringent data protection measures into AI systems and ensure that data collection, storage, and analysis are conducted with appropriate consent and oversight.

AI can inadvertently contribute to the erosion of privacy through the aggregation and analysis of supposedly anonymous data. When individual data points are combined, patterns and insights may emerge that could identify specific users, even in the absence of their personal information. This risk is notable in applications like smart city infrastructure or advertising, wherein multiple data sources may come together to generate a comprehensive profile of a given individual.
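
One way to make this re-identification risk concrete is a k-anonymity check: count how many records share each combination of seemingly harmless attributes. The sketch below is a minimal illustration with made-up records and hypothetical field names (`zip`, `birth_year`, `gender`). A result of 1 means at least one person is unique on those attributes and could be singled out even though no name is stored.

```python
from collections import Counter


def k_anonymity(records, quasi_identifiers):
    """Return the size of the smallest group sharing the same attribute values."""
    keys = [tuple(record[q] for q in quasi_identifiers) for record in records]
    return min(Counter(keys).values())


# Made-up "anonymous" records: no names, only indirect attributes.
records = [
    {"zip": "10001", "birth_year": 1980, "gender": "F"},
    {"zip": "10001", "birth_year": 1980, "gender": "F"},
    {"zip": "10001", "birth_year": 1975, "gender": "M"},
    {"zip": "10002", "birth_year": 1975, "gender": "M"},
    {"zip": "10002", "birth_year": 1980, "gender": "F"},
    {"zip": "10002", "birth_year": 1975, "gender": "F"},
]

k_zip_only = k_anonymity(records, ["zip"])
k_combined = k_anonymity(records, ["zip", "birth_year", "gender"])
print(k_zip_only)  # each zip code alone is shared by several people
print(k_combined)  # combined attributes can isolate individuals
```

Each attribute looks safe in isolation, yet combining them shrinks the smallest group to a single person, which is the aggregation effect described above.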

It is vital to ensure that AI systems are designed and deployed in ways that prevent unintended consequences, including violations of personal privacy.

Moreover, ethics in AI must be a central concern when assessing the societal implications of surveillance technologies. Surveillance tools may disproportionately affect marginalized communities, potentially exacerbating existing social inequalities and posing threats to fundamental human rights. Ethical guidelines for AI development should incorporate principles that promote fairness, accountability, and transparency, addressing potential biases and reinforcing a commitment to human dignity and autonomy.

In order to ensure the responsible use of AI-driven surveillance technologies, collaboration is required between AI developers, governments, and various stakeholders to develop robust frameworks and policies that prioritize privacy protection.

Ethical considerations must be embedded throughout the entire life cycle of AI systems. Addressing the potential risks associated with AI and privacy involves engaging in continuous dialogue, vigilance, and a shared commitment to uphold the rights and well-being of individuals within an increasingly data-driven society.

AI Accountability and Responsibility

As we strive to uphold these ethical considerations, AI accountability and responsibility emerge as critical dimensions that must be considered in the development and implementation of artificial intelligence systems. One primary concern is assigning responsibility when AI systems malfunction or produce unintended consequences.

As these systems become more complex and autonomous, pinpointing the responsible parties for errors, malfunctions, or negative societal impacts becomes increasingly challenging. This raises important legal and ethical questions that need to be addressed since traditional frameworks may not suffice for the unique nuances of AI systems.

Enhancing Accountability and Responsibility in AI

One approach to enhancing accountability and responsibility in AI is to introduce legislation that explicitly outlines when and how AI developers, owners, and users can be held liable for the actions of their systems. Lawmakers and regulators need to develop flexible policies that can adapt to rapid technological advancements and adequately respond to the evolving AI landscape.

Furthermore, as AI systems often learn from the data they are provided, their creators must ensure that unbiased and ethical data are used to train these systems. As such, AI developers have a moral and legal duty to closely monitor their systems and continuously address any discriminatory or harmful behaviors that may arise.

Transparency in AI Systems

Another aspect of AI accountability and responsibility involves the need for transparency in AI systems. In order for stakeholders to understand how AI systems arrive at their conclusions or make decisions, it is crucial for developers to make these processes more explicit and understandable.

Explainable AI can help address this challenge by providing insights into the inner workings of complex algorithms and systems, fostering trust and enabling better-informed decisions on the implementation and regulation of AI technologies.

In turn, this transparency can help create a sense of shared responsibility among all parties involved in the AI lifecycle, from developers to end-users.

Interdisciplinary Collaboration and Public Opinion

To further explore the ethical and legal perspectives of AI accountability and responsibility, it is essential to consider the different stakeholders who may be affected by AI systems. An interdisciplinary approach should be taken, wherein ethicists, lawmakers, computer scientists, and industry leaders collaborate to establish best practices and guidelines for AI technologies.

Integrating public opinion and consensus-driven policymaking can help create a broader sense of responsibility and ownership when it comes to the governance of AI systems. By involving the public in AI-related decisions and policy development, it is more likely that the technology will be ethically aligned with societal values.

Fostering Responsible Development and Deployment of AI

Addressing the accountability and responsibility challenges in AI calls for a collaborative effort from a wide range of individuals and institutions, spanning fields such as law, policy, technology, and education. By working together, we can cultivate an environment where AI technologies are developed and deployed responsibly, ultimately benefiting society as a whole.

AI Transparency and Explainability

Central to the subject of ethics in artificial intelligence is the importance of transparency and explainability. The ‘black box’ problem pertains to the often opaque nature of AI systems, which can make their decision-making processes difficult to comprehend.

This lack of clarity has the potential to create distrust and reluctance in adopting AI technology, thus hindering its overall positive impact. By advocating for AI transparency and explainability, we can establish trust and acceptance among users and the general public, allowing for a smoother integration of AI into various aspects of our lives.

One approach to enhancing AI transparency is implementing clear, concise documentation for AI algorithms and systems, detailing the input data, processes, and output decisions. This would help users comprehend the mechanics behind AI-driven decisions and results.
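
Such documentation can also be kept in a structured, machine-readable form next to the model itself. The sketch below is one hypothetical layout, loosely in the spirit of a "model card". The `ModelCard` class, its field names, and the example values are all assumptions made for illustration, not an established schema.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelCard:
    """Hypothetical record describing what a model consumes, does, and outputs."""
    name: str
    intended_use: str
    input_data: list
    processing_steps: list
    output_decision: str
    known_limitations: list = field(default_factory=list)

    def to_json(self):
        """Serialize the card so it can be published alongside the model."""
        return json.dumps(asdict(self), indent=2)


# Example values are invented for illustration.
card = ModelCard(
    name="loan-screening-demo",
    intended_use="First-pass screening; final decisions are made by a human.",
    input_data=["income", "existing debt", "years employed"],
    processing_steps=["normalize inputs", "apply weighted scoring"],
    output_decision="score above threshold is forwarded for manual review",
    known_limitations=["not validated for applicants under 21"],
)
print(card.to_json())
```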

Additionally, developing open-source AI platforms encourages the AI community to contribute to the creation, review, and improvement of these systems by sharing knowledge and experiences. This collective approach also accelerates ethical AI development, allowing professionals and hobbyists alike to learn from one another.

Another important aspect of AI explainability is developing interpretable AI models, where users and analysts can comprehend the reasoning behind the AI’s predictions. By allowing humans to interpret and analyze the AI’s decision-making, it becomes easier to identify and rectify biases and other ethical concerns.

Explainable AI models not only aid developers in refining their systems by identifying potential flaws but also help users feel confident in the AI systems they choose to employ.
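
To give a sense of what "interpretable" can mean, the sketch below uses a simple linear scoring model, where each feature's contribution to the final score is just its weight times its value. The weights, feature names, and applicant values are invented for illustration. Real systems are usually far more complex and may need dedicated explanation techniques, so this is a toy example rather than a general method.

```python
def explain_linear_score(weights, bias, features):
    """Return the score and per-feature contributions, largest effect first."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked


# Hypothetical model parameters and one hypothetical applicant.
weights = {"income": 2.0, "debt": -3.0, "years_employed": 0.5}
bias = 1.0
applicant = {"income": 4.0, "debt": 2.0, "years_employed": 6.0}

score, ranked = explain_linear_score(weights, bias, applicant)
print(score)
for name, contribution in ranked:
    print(f"{name}: {contribution:+.1f}")
```

Because every contribution is visible, a reviewer can see which inputs drove a decision and question any that look unjustified.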

Furthermore, involving multidisciplinary teams in the AI development process can improve transparency and explainability.

Combining the expertise of different domains, such as computer science, psychology, philosophy, and other social sciences, helps bridge the gap between AI systems and human cognition. Creating diverse, inclusive teams with varied perspectives ensures the integration of ethical considerations in AI design, thereby making AI systems more transparent and understandable.

AI transparency and explainability are crucial to ensuring that the artificial intelligence systems we create and use adhere to ethical standards.

By focusing on these aspects, we can foster a robust AI ecosystem that not only promotes efficiency but also respects the values and needs of individual users, communities, and society as a whole.

As a result, addressing and overcoming the ‘black box’ problem is the key to unlocking AI technology’s full potential ethically and responsibly.

Ethical AI Design and Development

To guarantee ethical AI design and development, adopting an interdisciplinary approach is essential. This means incorporating diverse perspectives from fields like social sciences, humanities, and ethics, in addition to traditional computer science and engineering disciplines.

Integrating these various viewpoints in AI development provides a comprehensive understanding of the possible ethical implications and societal impacts arising from AI technologies.

Moreover, this approach encourages the creation of AI systems that are not only technologically advanced, but also sensitive to cultural, ethical, and social concerns, fostering a seamless connection between AI system values and their real-world applications.

Involving stakeholders at every step of AI design and development is another essential strategy in ensuring ethical AI. This entails engaging with the end-users, organizations, regulatory bodies, and the wider community affected by AI systems.

By consulting with stakeholders, designers and developers can obtain invaluable insights into how AI may impact different groups, identify potential biases and risks, and foster transparency and trust in AI technologies.

By creating an ongoing dialogue with stakeholders, AI developers can continuously refine their systems to minimize any unintended consequences, ensure fairness, and promote social good.

Adhering to best practices in AI design and development also involves setting ethical guidelines and frameworks that can steer the trajectory of AI technologies towards responsible applications. This includes developing guidelines that promote transparency, accountability, explainability, and fairness in AI systems.

Additionally, it entails creating evaluation criteria for AI algorithms and tools to assess their performance based on ethical factors, and continuously monitoring the technology’s deployment and impact on society.

By integrating ethical considerations into the AI development process, designers and developers can create AI systems that align with broader societal values.

Privacy and data protection are also crucial ethical concerns in AI development. Understanding the implications of data collection, processing, storage and sharing is essential for ethically designing AI systems.

Developers should adopt privacy-by-design principles, ensuring that data privacy considerations are integrated throughout the AI development process.

This involves not only meeting legal requirements for data protection but also respecting the fundamental rights and expectations of individuals whose data is being used within AI systems.
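
Two common privacy-by-design building blocks are data minimization (store only the fields a task needs) and pseudonymization (replace direct identifiers with keyed tokens). The sketch below illustrates both using Python's standard `hmac` and `hashlib` modules. The field names, the sample record, and the hard-coded key are assumptions for illustration only; a real deployment would load the key from a secure store and would still need to treat pseudonymized data as personal data.

```python
import hashlib
import hmac


def pseudonymize(user_id, secret_key):
    """Replace an identifier with a keyed SHA-256 token (stable for a given key)."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record, allowed_fields):
    """Keep only the fields explicitly needed for the task."""
    return {key: value for key, value in record.items() if key in allowed_fields}


# Illustrative only: never hard-code a real key in source code.
SECRET_KEY = b"example-key-store-securely"

raw = {
    "user_id": "user-123",
    "email": "person@example.com",
    "age_band": "30-39",
    "clicked": True,
}

stored = minimize(raw, {"age_band", "clicked"})
stored["user_token"] = pseudonymize(raw["user_id"], SECRET_KEY)
print(stored)
```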

Last but not least, promoting continuous learning and adaptation in ethical AI design and development is essential. Considering that AI technologies are rapidly advancing and societal values are constantly evolving, developers must maintain an agile approach and regularly reflect on the ethical implications of their creations.

To achieve this, education and training opportunities should be provided for AI developers to stay updated on the latest ethical standards and best practices. This will help create AI systems that maximize benefits for society while minimizing potential harm.

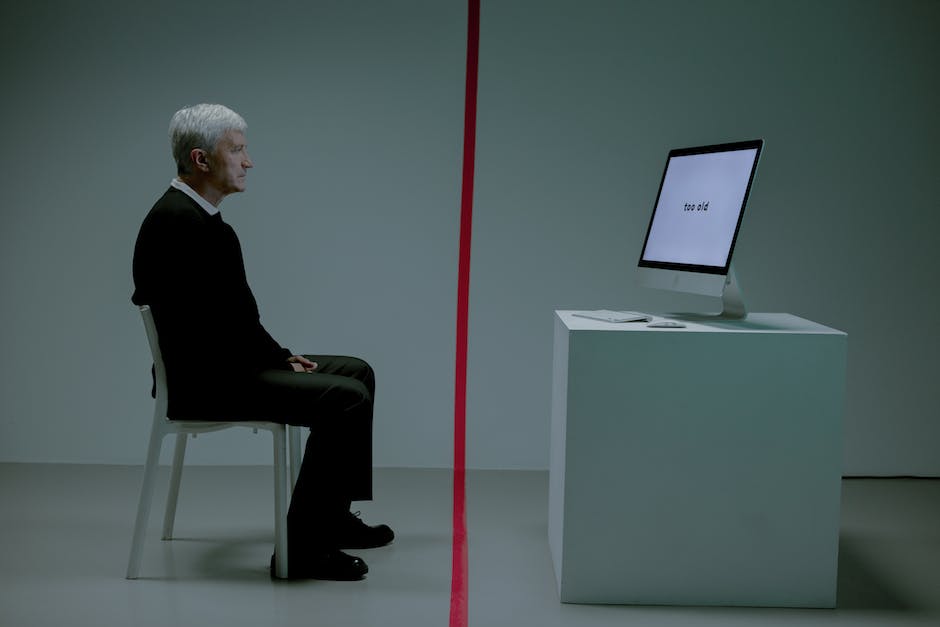

AI and Job Automation

Artificial intelligence (AI) is transforming the landscape of job automation, dramatically changing the way people work and the roles they fill in various industries. As the technology advances, AI’s potential to automate tasks traditionally performed by humans grows increasingly apparent.

AI-driven job automation is disruptive, affecting industries as diverse as manufacturing, agriculture, healthcare, and finance. While automation may increase productivity and efficiency, it might also disproportionately displace workers in jobs requiring repetitive or predictable tasks, creating concerns about unemployment, income inequality, and social unrest.

The ethical implications here are complex, as weighing the benefits of AI-driven progress against the potential harm to individual workers and vulnerable communities necessitates deep introspection and consideration from different stakeholders, including policymakers, educators, and business leaders.

To minimize the negative consequences of AI on job automation, reskilling and adaptation are crucial. Education and vocational training programs must evolve in a manner that not only equips individuals with technical knowledge about AI but also fosters creativity, critical thinking, and emotional intelligence.

These skill sets are less likely to be replaced by AI and are essential in fostering an adaptable workforce, mitigating the risk of massive unemployment due to automation. It is ethically imperative for governments and organizations to invest in such programs so that current and future generations can enjoy equal opportunities for meaningful employment and personal growth.

Aside from individual reskilling, ethical AI development should also focus on augmenting and enhancing human capabilities, rather than solely automating human tasks. This human-centered approach to AI can promote the creation of machines that empower humans to make better decisions, solve complex problems more efficiently, and improve their overall quality of life.

By focusing on how AI can complement human strengths and alleviate weaknesses, AI and job automation can be better aligned with ethical considerations, promoting both technological advancement and social good.

Transparency and regulation play a critical role in addressing the ethical implications of AI and job automation. It is crucial for governments and organizations to establish clear policies, standards, and guidelines that ensure AI systems are designed and deployed with ethical considerations in mind.

Engaging in public discourse and involving diverse stakeholders is essential in forming policies that strike a balance between innovation and social responsibility. By promoting the ethical use of AI and fostering collaboration among stakeholders, we can work towards a more equitable, sustainable, and prosperous future for all members of society, despite the rapid advancements in AI and automation.

AI Regulation and Governance

The widespread adoption of artificial intelligence (AI) across various sectors has sparked ethical concerns surrounding privacy, fairness, transparency, and accountability. Addressing these concerns and guaranteeing ethical behavior in AI development requires robust regulation and governance frameworks.

AI regulation can be implemented at multiple levels, including governmental, international, and corporate self-regulation. Combining these approaches will encourage responsible AI development, enhance public trust, and help prevent the potential misuse of AI technologies.

Government Regulation

Governments play a crucial role in regulating AI to safeguard citizens’ rights and ensure ethical practices. They can develop national AI strategies with explicit ethical guidelines, set up dedicated regulatory bodies to oversee the implementation of AI policies, and enact legislation targeting specific AI use cases.

For instance, in the European Union, a proposed AI regulatory framework aims to set strict standards for high-risk AI systems, such as facial recognition and biometric identification technologies, to protect citizens’ privacy and civil liberties. By enforcing AI regulations, governments can facilitate the responsible development and deployment of AI technologies in the public and private sectors.

International Standards

International bodies, such as the United Nations and the Organisation for Economic Co-operation and Development (OECD), can contribute to establishing global AI ethics standards.

These organizations can develop guidelines, such as the OECD’s AI Principles, which outline ethical values that AI systems should uphold, including respect for human rights, fairness, transparency, and explainability. By harmonizing AI regulations across countries, international cooperation can lead to consistent ethical standards for AI developers and users, ensuring ethical considerations are maintained worldwide.

Self-Regulation by Companies

Self-regulation by companies is another aspect of AI governance that promotes ethical behavior. Tech giants, like Google, Microsoft, and IBM, have established their own AI ethics principles to guide their development and deployment of AI technologies.

These principles often include transparency, fairness, accountability, and privacy protection. Companies can also appoint ethics boards, create transparency reports, and engage in third-party audits to demonstrate their commitment to ethical AI practices.

Additionally, implementing AI ethics guidelines at the organizational level can cultivate a culture of responsibility and accountability among employees.

Multi-Stakeholder Collaboration

Another approach to improve AI ethics is to encourage multi-stakeholder collaboration, including academia, civil society, and the user community. By involving diverse actors in the conversation, a more comprehensive and inclusive understanding of potential ethical risks can be achieved.

This collaboration can contribute to the development of best practices, tools, and methods for assessing and monitoring AI systems’ ethical behavior.

Establishing a shared understanding of AI ethics and fostering collaboration across various sectors can facilitate the responsible growth and deployment of AI applications in society.

Ultimately, addressing the ethical challenges posed by artificial intelligence is a collective responsibility that requires the involvement of multiple stakeholders, such as governments, companies, academia, and the general public.

By fostering an interdisciplinary approach that encompasses various perspectives, we can anticipate potential issues, formulate effective regulations, and incorporate ethical principles into the design and development of AI systems.

As we continue to navigate this rapidly evolving technological landscape, our focus must remain on creating AI solutions that align with human values and promote the greater good for all.

I’m Dave, a passionate advocate and follower of all things AI. I am captivated by the marvels of artificial intelligence and how it continues to revolutionize our world every single day.